Using Computer Architecture for Trucking Logistics


Disruption

Disruption has been the catalyst for the computing and IT industry. It seems professionals in these industries are never satisfied. It wasn’t enough to make desktop computing available to every home. No, engineers had to outdo themselves by miniaturizing computer hardware into an all-in-one device called the smartphone.

With the advent of the smartphone, every human being has access to one of the most disruptive infrastructures known to mankind: the internet. Nearly every person is on the internet sharing thoughts, pictures, news articles, etc. with individuals around the globe. The fundamental computer architecture, composed of the Central Processing Unit (CPU) and memory, is the foundation for such disruption.

Congestion

High-speed CPUs and memories are the reason we’re able to stream videos, take captivating photos, and conduct video conference calls effortlessly.

Data representing video or music, for example, travel as electrons over wires between the CPU and memory. The CPU requests data from memory and expects transfers to occur swiftly, without congestion, in order to provide a smooth movie or audio experience.

The assumption, though, is that CPUs and memories can transmit and receive data at the same rate. But this is false!

CPUs in smartphones process (theoretically) over 600 billion operations per second and transmit data across wires (theoretically) at a rate of 21.3 GB/s.

CPU speeds typically exceed the throughput capabilities of memory. Using the figures above, the number of operations per second eclipses the number of bytes transferred per second by a ratio of roughly 28:1. Interestingly, this imbalance is still prevalent today.
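The 28:1 figure follows directly from the two theoretical peak numbers quoted above. A minimal check, assuming the transfer rate is given in decimal gigabytes and that the ratio compares operations to bytes (the variable names are illustrative only):

```python
# Theoretical peak figures quoted in the text.
ops_per_second = 600e9       # CPU operations per second
bytes_per_second = 21.3e9    # memory transfer rate, 21.3 GB/s (decimal GB assumed)

# How many operations the CPU could perform for each byte memory can deliver.
ratio = ops_per_second / bytes_per_second
print(f"operations per byte transferred: {ratio:.1f}")  # prints 28.2
```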

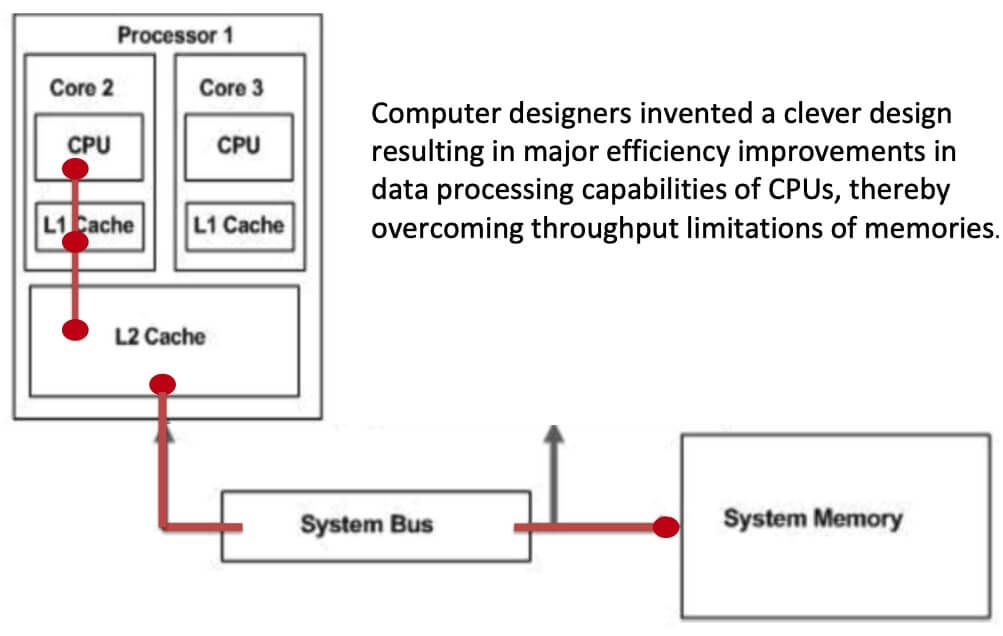

Congestion Solution

• Introduced in the mid 1990s

• Small memory banks located in close proximity to the CPU

• Stores information the CPU is most likely to need next

• Algorithms determine data to be cached

• Cached data is retrieved on demand by the CPU

• Minimizes trips to long-range system memory

• Shorter travel distance

L1/2 Cache

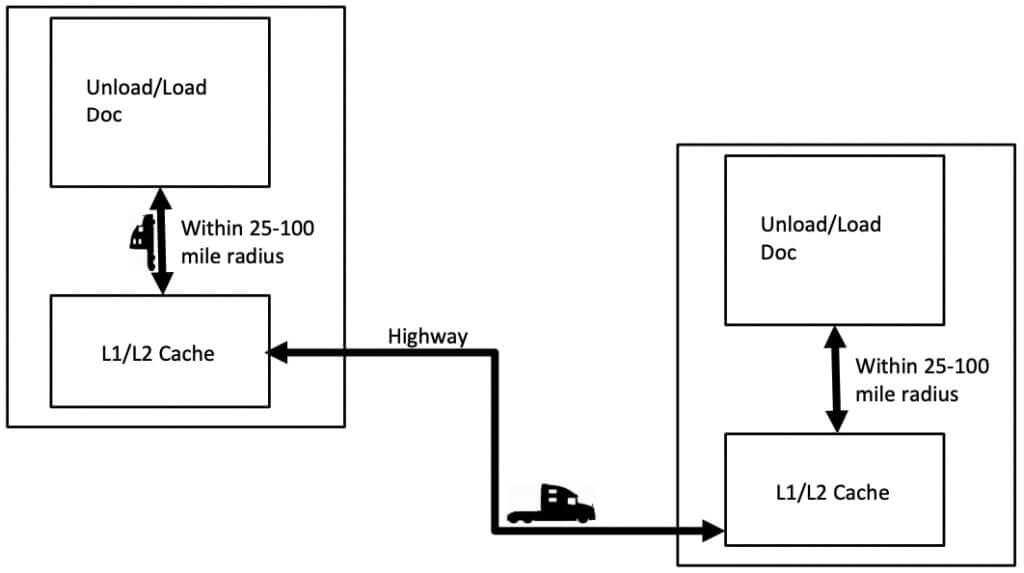
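The caching idea in the bullets above can be sketched in a few lines. This is a toy model, not how any particular CPU works: it assumes a least-recently-used (LRU) replacement policy, which is only one of many algorithms a real cache might use, and the class name `SmallCache` and the dictionary standing in for system memory are hypothetical.

```python
from collections import OrderedDict


class SmallCache:
    """A tiny LRU cache sitting in front of a slow backing store."""

    def __init__(self, capacity, backing_store):
        self.capacity = capacity
        self.backing_store = backing_store  # stands in for system memory
        self.lines = OrderedDict()          # cached address -> value
        self.hits = 0
        self.misses = 0

    def read(self, address):
        if address in self.lines:
            # Hit: served from the nearby cache, no long trip needed.
            self.hits += 1
            self.lines.move_to_end(address)  # mark as most recently used
            return self.lines[address]
        # Miss: make the long trip to the backing store, then keep a copy.
        self.misses += 1
        value = self.backing_store[address]
        self.lines[address] = value
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)   # evict the least recently used entry
        return value


memory = {addr: addr * 10 for addr in range(100)}
cache = SmallCache(capacity=4, backing_store=memory)

# A loop that keeps touching the same few addresses.
for _ in range(5):
    for addr in (1, 2, 3):
        cache.read(addr)

print(cache.hits, cache.misses)  # prints 12 3
```

Of the fifteen reads, only the first three travel to system memory; the remaining twelve are served from the cache.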

Interestingly, the trucking industry suffers from a similar imbalance between the high throughput capabilities of tractor trailers and the limitations at the loading/unloading docks. In the case of trucking, the loading/unloading docks can be thought of as the CPU (i.e., where payload is processed or handled) and the trailers as memory (i.e., where payload is stored). In contrast to the computer world, the loading/unloading docks (the CPU) are slower at processing payloads (data) than trucks and trailers (memory) are at delivering them.

How can L1/L2 cache techniques be applied to trucking logistics?

Could similar techniques alleviate long delays at loading/unloading docks?

Would this improve driver morale, wages, and satisfaction, and in turn reduce driver turnover and the driver shortage?

With the introduction of an advanced drop-and-hook strategy built on the trailer cache concept, loads can be dispatched proactively to and from the shipper or receiver.

Design Requirements

• Cache layout is a simple secured paved lot.

• A truck cache would require development of new or existing land in remote areas.

• Numerous caches (100+) to be strategically built across major roads.

• Trailers are equipped with asset-tracking devices

• Employment of short-range (25-100 miles) drivers to transport trailers to/from hubs and caches.

• Implementation of advanced algorithms to predict when the next trailer should be dispatched from a cache (or delivered to a cache), or whether caching can be skipped (i.e., delivery directly to the hub)

• Collaborative workforce of Owner Operators
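The dispatch decision in the requirements above could start from something far simpler than an advanced predictive algorithm. The following is a rough sketch using a plain threshold rule on estimated dock wait time. Every name here (`DockState`, `expected_wait_minutes`, `route_inbound_trailer`, `should_dispatch_from_cache`) and every number is hypothetical and chosen for illustration only.

```python
from dataclasses import dataclass


@dataclass
class DockState:
    doors: int                  # total dock doors at the hub
    busy_doors: int             # doors currently occupied
    trailers_waiting: int       # trailers already queued at the hub
    minutes_per_trailer: float  # average handling time per trailer (assumed known)


def expected_wait_minutes(dock: DockState) -> float:
    """Rough wait estimate for a trailer arriving at the hub now."""
    free_doors = dock.doors - dock.busy_doors
    if dock.trailers_waiting < free_doors:
        return 0.0  # a door is open even after the current queue is served
    # Otherwise the new trailer waits for the queue ahead of it to drain across all doors.
    queue_ahead = dock.trailers_waiting - free_doors + 1
    return queue_ahead * dock.minutes_per_trailer / dock.doors


def route_inbound_trailer(dock: DockState, max_wait_minutes: float = 30.0) -> str:
    """Deliver straight to the hub if the wait is tolerable, else park at a cache."""
    return "hub" if expected_wait_minutes(dock) <= max_wait_minutes else "cache"


def should_dispatch_from_cache(dock: DockState, cached_trailers: int,
                               drive_minutes: float) -> bool:
    """Send a cached trailer if the dock should be free by the time it arrives."""
    return cached_trailers > 0 and expected_wait_minutes(dock) <= drive_minutes


quiet = DockState(doors=4, busy_doors=1, trailers_waiting=0, minutes_per_trailer=45)
busy = DockState(doors=4, busy_doors=4, trailers_waiting=6, minutes_per_trailer=45)

print(route_inbound_trailer(quiet))  # prints hub
print(route_inbound_trailer(busy))   # prints cache
```

A real system would presumably replace the fixed threshold with forecasts built from asset-tracking data, but the decision has the same shape: compare the expected dock wait against the cost of staging the trailer nearby.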

Logistics with Caching